Oculus Chief Scientist Predicts the Future of VR Platform Tech

October 10, 2016

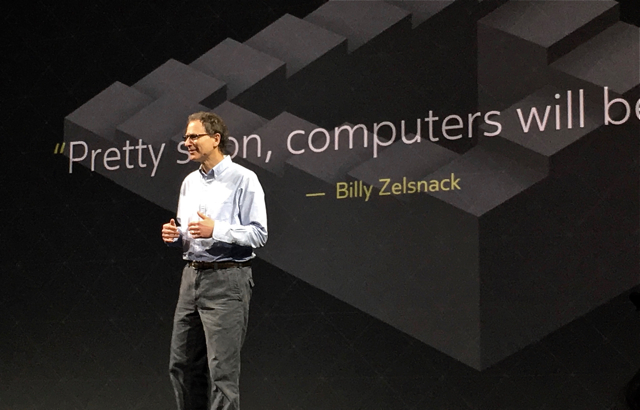

Defying the conventional wisdom that it doesn’t pay to be too specific, Oculus chief scientist Michael Abrash laid out what virtual reality technology will look like in 2021 at the Oculus Connect 3 conference last week. With the caveat that he would be proved “wrong about some of the specifics,” Abrash described a high-end VR future that includes displays with 4K x 4K resolution per eye and a 140-degree field of view, foveated rendering, personalized spatial audio, and “augmented virtual reality.” Abrash was the final speaker in a keynote session that stretched past two hours.

Starting with VR optics and displays, the technology that put Oculus on the map, Abrash said that in five years panel resolution will reach 4K x 4K per eye, compared with today’s 1080 x 1200, and the field of view will expand from 90 degrees to 140 degrees. Because pixel density trades off against field of view, and a wide field of view is essential to creating presence, pixels per degree will only double, from 15 to 30. Abrash also predicted the introduction of flexible depth of focus. The image won’t be 20/20, but it will be clear enough “to pass the driver’s test,” he said.
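The arithmetic behind that trade-off is easy to sketch. The Python snippet below is a back-of-the-envelope estimate, not Abrash’s own calculation. It assumes that “4K” means 4,000 pixels across each eye, that pixels are spread evenly over the field of view (real headset lenses are not uniform, which is one reason quoted figures can differ from a naive average), and the common approximation that 20/20 vision resolves about one arcminute, or roughly 60 pixels per degree. The function names are hypothetical.

```python
# Rough arithmetic behind the resolution vs. field-of-view trade-off.
# Assumptions (not from the talk): "4K" means 4,000 pixels across each eye,
# pixels are spread evenly across the field of view (real lenses are not uniform),
# and 20/20 vision resolves about 1 arcminute, i.e. roughly 60 pixels per degree.

ARCMIN_PER_DEGREE = 60


def average_ppd(pixels_across: float, fov_degrees: float) -> float:
    """Naive average pixels per degree across the field of view."""
    return pixels_across / fov_degrees


def snellen_denominator(ppd: float) -> float:
    """Approximate Snellen acuity 20/x for a display limited to `ppd` pixels per degree."""
    return 20 * ARCMIN_PER_DEGREE / ppd


panel = 4000  # hypothetical pixel count across one eye's 4K panel
for fov in (90, 140):
    ppd = average_ppd(panel, fov)
    print(f"{fov:>3} deg FOV: {ppd:.1f} ppd, roughly 20/{snellen_denominator(ppd):.0f}")
```

Spreading the same panel over 140 degrees instead of 90 drops the average from about 44 to about 29 pixels per degree. Under these assumptions, 30 pixels per degree corresponds to roughly 20/40 acuity, the vision standard many US states use for a driver’s license, which fits Abrash’s “driver’s test” remark.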

“Foveated rendering will be a core VR technology,” Abrash continued, because it significantly reduces the number of pixels that must be rendered to maintain a 90 fps frame rate with high-quality graphics. Only a very small region of the retina, the fovea, sees at full resolution; foveated rendering varies pixel density across the scene to match, rendering full detail only where the user is looking.

Nevertheless, eye tracking “at the level you need for foveated rendering, is not a solved problem,” warned Abrash, who called accurate, robust eye tracking the “single greatest risk factor” for his predictions.
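To see why foveated rendering saves so much work, and why it hinges on eye tracking, consider a deliberately simple two-zone model, sketched below in Python. It is an illustration only, not how Oculus implements the technique: the function name, the square field of view, the 10-degree full-detail radius and the quarter-resolution periphery are all hypothetical parameters. Real systems blend resolution smoothly and must move the high-detail region to wherever the tracked gaze lands.

```python
import math


def foveated_pixel_fraction(fov_deg: float, fovea_radius_deg: float, periphery_scale: float) -> float:
    """Fraction of full-resolution pixels shaded by a simple two-zone foveated renderer.

    Hypothetical model: the view is a fov_deg x fov_deg square; a disc of
    fovea_radius_deg around the gaze point is rendered at full density, and the
    rest at 1/periphery_scale of the resolution along each axis.
    """
    total_area = fov_deg * fov_deg
    fovea_area = math.pi * fovea_radius_deg ** 2
    periphery_area = total_area - fovea_area
    # Reducing resolution by a factor k per axis cuts the pixel count by k^2.
    shaded = fovea_area + periphery_area / periphery_scale ** 2
    return shaded / total_area


# Illustrative parameters only: 140-degree view, 10-degree high-detail radius
# (larger than the fovea itself, leaving margin for eye-tracking error),
# and the periphery at quarter resolution per axis.
fraction = foveated_pixel_fraction(140, 10, 4)
print(f"Shaded pixels: {fraction:.1%} of full resolution")
```

With these made-up numbers, fewer than a tenth of the full-resolution pixels need to be shaded. If the eye tracker is slow or inaccurate, the high-detail disc has to grow to cover the error, and the savings shrink quickly.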

Audio solutions “are more straightforward,” and in 2021 users will be able to “quickly and easily generate a personalized head related transfer function — or HRTF,” which describes how sound bounces off and moves around the head and ears. In addition, Abrash believes that technologies modeling how sound reflects, diffracts and interferes in space will emerge, though they will be applied to virtual worlds only in a few limited scenarios.
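In practice an HRTF is usually applied as a pair of filters, one per ear: a sound source is convolved with the left-ear and right-ear head-related impulse responses (HRIRs, the time-domain form of the HRTF) for its direction. The Python sketch below shows only that filtering step, with made-up four-sample impulse responses standing in for real ones. Measured HRIRs are much longer and, in the personalized approach Abrash described, would be fitted to an individual listener’s head and ears. The function names are hypothetical.

```python
def convolve(signal, impulse_response):
    """Direct-form discrete convolution (output length len(signal) + len(ir) - 1)."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out


def binauralize(mono, hrir_left, hrir_right):
    """Render a mono source to two ears by filtering it with each ear's impulse response."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


# Toy impulse responses for a source off to the listener's right (hypothetical
# values): the right ear hears it immediately at full level, while the left ear
# hears it three samples later at half amplitude, mimicking the interaural time
# and level differences a real measured HRTF encodes.
hrir_right = [1.0, 0.0, 0.0, 0.0]
hrir_left = [0.0, 0.0, 0.0, 0.5]

click = [1.0, 0.0, 0.0]
left, right = binauralize(click, hrir_left, hrir_right)
print("left: ", left)
print("right:", right)
```

The right channel carries the click at once and at full level, while the left channel carries it later and quieter; the brain reads differences like these as direction.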

Moving on to interfaces, Abrash proclaimed, “It is quite likely Touch will be the mouse of VR,” referring to Oculus’s Touch hand controllers, which he expects to remain the primary technology for complex interactions in virtual space. While he sees potential for direct hand manipulation, he said the necessary kinematic and haptic technologies are not “even on the direct horizon.”

For now, hand tracking and gesture-based interfaces will be limited to simple tasks, such as choosing a movie to watch or typing on a virtual keyboard, and tracked hands will be modeled to make avatars in social VR more believable.

Finally, Abrash predicted that “we won’t be walking onto a holodeck anytime soon.” Instead, the lines between the real and virtual worlds will become increasingly blurred. He sketched a form of mixed reality he dubbed “augmented virtual reality,” which differs from current AR experiences delivered through see-through glasses.

Augmented virtual reality brings the real world into VR, and users will be able to manipulate both in any way they want, at will. Abrash described an experience “where we can move around safely and confidently, pick up coffee mugs, see who just came into the room, be anywhere on earth where we want to be, and interact with anyone on the planet.”

One thing Abrash does not expect anytime soon is completely convincing digital humans. Even so, he predicted that real-time 3D capture and machine learning will advance enough within five years to produce avatars capable of meaningful and profound social experiences in VR.
