Cerebras Chip Tech to Advance Neural Networks, AI Models

August 25, 2021

Deep learning typically runs on clusters of computers wired together in data centers, where communication between chips consumes a lot of energy and slows the process. Cerebras takes a different approach. Instead of printing dozens of chips onto a large silicon wafer, cutting them out and wiring them to each other, it makes the largest computer chip in the world, roughly the size of a dinner plate. Texas Instruments tried this approach in the 1960s but ran into problems.

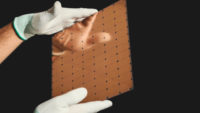

The New Yorker reports that, with Cerebras’s printing system, which was developed in partnership with Taiwan Semiconductor Manufacturing Co. (TSMC), “the wiring lines up … [and] the result is a single, ‘wafer-scale’ chip, copper-colored and square, which is twenty-one centimeters on a side.”

In comparison, the largest GPU is a bit less than three centimeters across. Cerebras produced the Wafer-Scale Engine 1, its first such chip, in 2019. This year, it debuted the WSE-2 (pictured above), which “uses denser circuitry and contains 2.6 trillion transistors collected into eight hundred and fifty thousand processing units, or ‘cores’.”

Cerebras co-founder and CEO Andrew Feldman said the design’s benefits include faster communication among the cores, better handling of memory, and the ability of cores to work more quickly on different data. To address the issue of yield, Cerebras made all the cores identical so that if “one cookie comes out wrong, the ones surrounding it are just as good.”

Although a “typical, large computer chip might draw three hundred and fifty watts of power … Cerebras’s giant chip draws fifteen kilowatts,” which Feldman said is the most that anyone has delivered to a chip. That makes cooling crucial; “three-quarters of the CS-1, the computer that Cerebras built around its WSE-1 chip, is dedicated to preventing the motherboard from melting.” Rather than fans, the CS-1 uses water to cool the processors.

In terms of speed, “the closest thing to an industry-wide performance measure for machine learning is a set of benchmarks called MLPerf, organized by an engineering consortium called MLCommons.” Nvidia’s GPUs hold the highest scores, but “Cerebras has yet to compete.”

“What you never want to do is walk up to Goliath and invite a sword fight,” Feldman said. “They’re going to allocate more people to tuning for a benchmark than we have in our company.”

He added that “a better picture of performance comes from customer satisfaction.” Because the CS-1 is priced at about $2 million, “the customer base is relatively small, although it is being used at the Lawrence Livermore National Laboratory, the Pittsburgh Supercomputing Center, and EPCC, the supercomputing center at the University of Edinburgh, as well as by pharmaceutical companies, industrial firms, and ‘military and intelligence customers’.”

AstraZeneca reported that it used a CS-1 “to train a neural network that could extract information from research papers,” and stated that it could complete in two days what would take “a large cluster of GPUs” two weeks. The U.S. National Energy Technology Laboratory stated that its CS-1 “solved a system of equations more than two hundred times faster than its supercomputer, while using ‘a fraction’ of the power consumption.” Cerebras will debut the CS-2 later this year.

Related:

A New Chip Cluster Will Make Massive AI Models Possible, Wired, 8/24/21

Synopsys CEO: AI-Designed Chips Will Generate 1,000X Performance in 10 Years, VentureBeat, 8/23/21

Intel Lands Pentagon Deal to Support Domestic Chip Making, The Wall Street Journal, 8/23/21

Samsung to Invest $205 Billion in Chip, Biotech Expansion, The Wall Street Journal, 8/24/21
