Consistency Is Key: Lessons on Generative AI via ‘The Bends’

September 23, 2025

In less than three years, generative AI has evolved from an experimental toy to a regular presence in studio pitches, previs workflows, and even the festival circuit. Yet one challenge has stymied the full adoption of generative AI in long-form storytelling: establishing and maintaining control over outputs. This challenge also fuels many of the anxieties surrounding the use of artificial intelligence in media production. How can artists maintain their creative voice when a machine is doing all the artistic work, and often doing so with inconsistent results? The Entertainment Technology Center at USC set out to tackle these and related challenges with a new film project, “The Bends.”

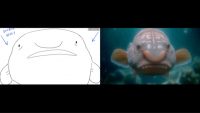

Let’s say you’re making a short film about a recognizable animal whose physical form influences the character’s personality traits, like a blobfish, which was the protagonist for “The Bends.”

How do you ensure that your protagonist not only resembles a blobfish but also retains its unique traits and quirks across successive shots? Furthermore, how do you maintain this same consistency in the environment from shot-to-shot so it doesn’t look like your character is swimming in the depths of the Atlantic Ocean one moment, then suddenly splashing in someone’s swimming pool the next?

Maintaining stylistic control requires more sophisticated tools and more complex data beyond simple prompt engineering and a few reference images of a blobfish. There are a variety of tools that the ETC deployed on “The Bends” to ensure both stylistic consistency and creative control across the film.

ControlNet

ControlNet is a tool that helps condition an output in a text-to-image diffusion model with greater precision than the text prompt itself. ControlNet extracts specific attributes from an input image and preserves them in subsequent generations.

Various ControlNet models isolate different attributes. For example, Adapter ControlNet samples depth information from an image and, together with a text prompt, replicates that same depth information across all outputs.

ControlNets were essential in executing artist-driven workflow for “The Bends.” The storyboard was fed into ControlNet — and the resulting output preserved the framing, character placement, setting, and shot length, while text prompts directed the model to fill in the remaining data to arrive at the final image.

LoRA (Low-Rank Adaptation)

LoRAs are also used to establish control but are fundamentally different than ControlNets. Diffusion models like Stable Diffusion are trained in massive datasets, but they are not trained on every possible datapoint. This is where LoRAs come in.

LoRAs are small, specialized models that can be trained on focused datasets, like a blobfish or a deep-sea environment, to generate results that more accurately reflect distinct characteristics of the input images. Otherwise, the outputs of a stable diffusion model alone can tend to look like a generic fish or seafloor that does not exhibit the same uniformity across the final film.

Both ControlNets and LoRAs extract and map certain characteristics of inputs consistently across outputs, but ControlNets extract data from individual image inputs to guide certain elements in an output, whereas LoRAs are datasets that are used to establish the overall style of an image or character.

But where does the data for training a LORA come from?

Synthetic Data

For “The Bends,” the challenge was not only its unique characters and settings but also a required stylistic blend of realism and fantasy. No existing dataset captured that blend, so new data had to be created.

Creating synthetic data begins with a single image created under the direction of the creative team in a chosen style or setting. A diffusion model then generates matching images, iteratively expanding the set until an entire synthetic database exists in that same style and narrative world.

This information can be used to train LORAs to generate outputs that maintain creative fidelity from the original concept art and remain consistent across shots.

Synthetic data can encompass everything from general environments to subtle effects, such as recurring lens distortions that heighten realism. It was this approach that allowed the blobfish to remain grounded in its deep-sea habitat and for the visual language of the original concept art to carry through the finished film.

Merging 3D and AI

The final technique used on “The Bends” involved projecting AI outputs onto 3D elements. Scenes were broken down into simple 3D primitives such as cubes and spheres. Generative AI then acted like a render engine, retexturing these shapes to create the final elements of the scene.

This is one of the more efficient ways in which to achieve world consistency, or consistency across background and foreground elements within a series of shots.

Maintaining uniformity across characters, environments, and scenes remains one of the greatest challenges in the adoption of generative AI for long-form content. Yet tools and techniques like the ones employed on “The Bends” are steadily restoring authorship to the artist. Rather than dictating style, these tools safeguard intent, making the filmmaker’s vision the organizing principle of the work.

For “The Bends,” the central aim was to discover how generative AI could serve as a partner in storytelling — efficient enough to streamline production, yet disciplined enough to preserve creative intent.

No Comments Yet

You can be the first to comment!

Leave a comment

You must be logged in to post a comment.