Nvidia Introduces AI-Powered GPUs and Cloud LLM Services

September 22, 2022

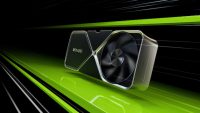

“Computing is advancing at incredible speeds. Acceleration is propelling this rocket, and its fuel is AI,” Nvidia founder and CEO Jensen Huang said in his 2022 GTC conference keynote, announcing two new AI services: the Nvidia NeMo large language model service, which helps customize LLMs, and the Nvidia BioNeMo LLM service, aimed at bio researchers. Nvidia also unveiled its GeForce RTX 40 Series GPUs, which ship in Q4. Powered by the company’s new Ada Lovelace architecture, the two new models, the GeForce RTX 4090 and GeForce RTX 4080, offer better ray tracing performance and AI-based neural graphics.

Ada’s extremely fast fourth-generation Tensor Cores increase throughput by up to 5X, to 1.4 Tensor-petaFLOPS using the new FP8 Transformer Engine, Nvidia says.

Ray tracing gets a boost from new third-generation RT Cores and the introduction of new AI-powered rendering techniques, Shader Execution Reordering and DLSS 3, reports Engadget. The outlet notes that Nvidia worked with TSMC to co-develop the new “4N” fabrication spec used on the 40 Series, which is “up to two times more power efficient than the 8nm process it used for its 30 Series cards.”

“The top-of-the-line RTX 4090 will cost $1,599 and go on sale October 12,” writes Bloomberg, noting that “the high-end version of the new chip will have 76 billion transistors and will be accompanied by 24GB of onboard memory on the RTX 4090, making it one of the most advanced in the industry.”

Nvidia’s RTX 4080 boards come in two configurations, both arriving in November. The base-model RTX 4080 has 12GB of GDDR6X memory and sells for $899, while the 16GB version costs $1,199. “However, Nvidia will only sell a Founders Edition model of the more expensive model. For the 12GB version, you’ll need to look to the company’s partners, which may make it hard to find models that actually start at $899,” Engadget says.

Nvidia’s new fully managed, cloud-powered AI services are aimed at enterprise software developers, making it “easier to adapt LLMs and deploy AI-powered apps for a range of use cases including text generation and summarization, protein structure prediction and more,” reports TechCrunch, explaining the new services leverage Nvidia’s NeMo, “an open source toolkit for conversational AI, and they’re designed to minimize — or even eliminate — the need for developers to build LLMs from scratch.”

Among Nvidia’s numerous and varied GTC conference announcements is Drive Thor, “one chip to rule all software-defined vehicles,” according to TechCrunch, which says this next-gen automotive-grade chip “will be able to unify a wide range of in-car technology from automated driving features and driver monitoring systems to streaming Netflix in the back for the kiddos.”
