Mozilla Intros Open-Source Speech Recognition, Voice Dataset

December 5, 2017

Mozilla has unveiled Project DeepSpeech and Project Common Voice, two efforts to make speech recognition technology openly available. The company says its Machine Learning Group has just reached “two important milestones” in this work. Mozilla is releasing its open source speech recognition model, which it says approaches the accuracy of humans listening to the same recordings, and is also unveiling the world’s second largest publicly available voice dataset, with contributions from almost 20,000 people around the world.

On its blog, Mozilla reports that, “there are only a few commercial quality speech recognition services available, dominated by a small number of large companies,” which “reduces user choice and available features for startups, researchers or even larger companies that want to speech-enable their products and services.”

This is the rationale behind launching DeepSpeech as an open source project. Drawing on “sophisticated machine learning techniques and a variety of innovations,” Mozilla says it has built a “speech-to-text engine that has a word error rate of just 6.5 percent on LibriSpeech’s test-clean dataset.”
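For readers unfamiliar with the metric, word error rate is conventionally the number of word substitutions, deletions, and insertions needed to turn the engine's transcript into the reference transcript, divided by the number of words in the reference. The sketch below illustrates that standard definition using a word-level edit distance. The function name `word_error_rate` and the sample sentences are illustrative assumptions; this is not DeepSpeech code and it does not reproduce Mozilla's evaluation.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return (substitutions + deletions + insertions) / reference word count.

    Illustrative helper only: splits on whitespace and compares words exactly,
    with no text normalization.
    """
    ref_words = reference.split()
    hyp_words = hypothesis.split()
    if not ref_words:
        raise ValueError("reference must contain at least one word")

    # prev_row[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    prev_row = list(range(len(hyp_words) + 1))
    for i, ref_word in enumerate(ref_words, start=1):
        curr_row = [i]  # deleting all i reference words so far
        for j, hyp_word in enumerate(hyp_words, start=1):
            substitution_cost = 0 if ref_word == hyp_word else 1
            curr_row.append(
                min(
                    prev_row[j] + 1,                      # deletion
                    curr_row[j - 1] + 1,                  # insertion
                    prev_row[j - 1] + substitution_cost,  # match or substitution
                )
            )
        prev_row = curr_row

    return prev_row[-1] / len(ref_words)


if __name__ == "__main__":
    # One substituted word out of six reference words.
    print(word_error_rate("the cat sat on the mat", "the cat sat on a mat"))
```

Under this definition, a 6.5 percent word error rate corresponds to roughly 6.5 word-level errors per 100 reference words.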

The initial release includes “pre-built packages for Python, Node.js and a command-line binary that developers can use right away to experiment with speech recognition.” The blog explains that the lack of “high quality, transcribed voice data” is what hinders the development of more “commercially available” services.

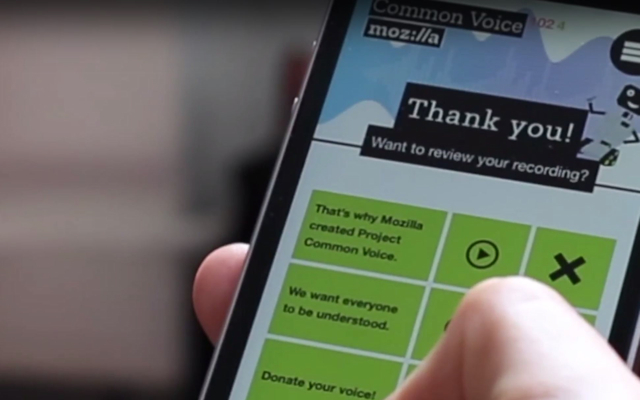

To that end, Mozilla’s Project Common Voice, which debuted in July, aims to “make it easy for people to donate their voices to a publicly available database, and in doing so build a voice dataset that everyone can use to train new voice-enabled applications.”

Mozilla released nearly 400,000 recordings representing 500 hours of speech in this “first tranche of donated voices.” Contributions came from more than 20,000 people around the world, “reflecting a diversity of voices globally,” including different accents. Mozilla notes that many speech recognition services understand men better than women, a reflection of “biases within the data on which they are trained.”

Although Common Voice started with English, the goal is to “support voice donations in multiple languages beginning in the first half of 2018.”
